Who’s reading?

Through this blog, we hope to help the layman understand how their online interactions can be put to positive use, while data engineers and aspirants in the field may be inspired to implement the idea in their own ways. We had fun working on this project, and we hope you have fun reading about it too!

Information is Money

You may not realise it, but a single visit to a website today leaves a trail of breadcrumbs that can be a treasure trove of data for businesses. If that sounds alarming, don't worry.

The data aggregated by most websites isn't private or meant to target you. Companies today go out of their way to better understand their audience's interests and behaviour, provide a better user experience, and stay competitive. The objective is mainly to understand general trends in how users interact and behave on a particular platform.

The next time you're on a website, pay attention to the user experience. You might notice some websites providing a more dynamic set of content than others. Your information makes this possible.

Cognitive analysis is an easy way for companies to personalize and customize experiences by tapping into that 'treasure trove of data'. Cognitive analysis has also found its way into the payments industry, where it helps solve a different class of problems.

With the rise in data breaches and other security issues, it became important for payment companies to maintain security standards that safeguard customers' personal data. This led to the introduction of compliance requirements in our industry: sets of rules that must be followed to meet different eligibility criteria.

At PayGlocal, we take compliance very seriously, while also following our own best practices to keep the risk on our platform minimal to zero. We follow a Defense in Depth approach: multiple security practices at each layer of the application, providing a holistic defense against threats. From edge defense at the network layer and an API Gateway at the application layer, to extensive encryption of data in transit, in use, and at rest in the DB layer, it's safe to say our application practices a Zero Trust Architecture.

Apart from the secure handling of in-transit and stored data, we also have a system in place for fraudster identification through data analytics.

Fintech vs the Fraudsters

Digitalisation of the industry has revolutionised the way we pay. A merchant today can accept payments from customers around the world, across different countries and currencies. Customers, on the other hand, can complete payments at the click of a button. However, along with the speed, convenience and sheer volume of digital payments, merchants have also had to contend with a steep rise in fraudsters.

Introducing Synapse

As part of our Defense in Depth strategy, we have added our own layer of protection: an in-house technology that secures payments by identifying fraudster activity. Inspired by clickstream analysis, we developed our own framework to make better use of users' online interactions. Synapse can be described as a roadmap of a user's activity. The streamed clicks and other behavioural aspects of a user's interaction in the web browser, such as whether they

- left the tab,

- entered data into the page,

- tried to close the window, etc.

are used for behavioural analysis. This helps us identify the behavioural patterns and habits of our users, and in turn identify fraudsters through suspicious activity (such as pasting text instead of typing, running scripts, or leaving the tab). In short, Synapse helps us:

- Identify fraudsters from suspicious activity

- Improve user experience by understanding customers' pain points

- Catch system errors, if any
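To make the idea of "suspicious activity" concrete, here is a minimal rule-based sketch of how the browser events recorded for one transaction could be screened for the signals mentioned above (pasting instead of typing, leaving the tab, script-like typing). The `Event` shape, the event kinds and the thresholds (`max_tab_leaves`, `min_key_gap_ms`) are hypothetical choices for illustration; this is not Synapse's actual detection logic.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median


@dataclass
class Event:
    kind: str   # hypothetical kinds: "keydown", "paste", "blur", "beforeunload"
    ts_ms: int  # client timestamp in milliseconds


def flag_suspicious(events, max_tab_leaves=2, min_key_gap_ms=15):
    """Return the reasons (if any) why one transaction's events look suspicious."""
    counts = Counter(e.kind for e in events)
    reasons = []
    # Pasting into the form without typing anything at all.
    if counts["paste"] and not counts["keydown"]:
        reasons.append("pasted input without typing")
    # Repeatedly switching away from the payment tab.
    if counts["blur"] > max_tab_leaves:
        reasons.append(f"left the tab {counts['blur']} times")
    # Keystrokes arriving faster than a human can type hint at a script.
    key_times = sorted(e.ts_ms for e in events if e.kind == "keydown")
    gaps = [b - a for a, b in zip(key_times, key_times[1:])]
    if len(gaps) >= 4 and median(gaps) < min_key_gap_ms:
        reasons.append("keystrokes too fast for a human")
    return reasons


human = [Event("keydown", t) for t in range(0, 2000, 180)]
bot = [Event("paste", 0)] + [Event("blur", t) for t in (100, 200, 300)]
print(flag_suspicious(human))  # []
print(flag_suspicious(bot))    # ['pasted input without typing', 'left the tab 3 times']
```

Fixed rules like these are easy to reason about but also easy to evade, so signals of this kind are probably more useful as inputs to a scoring model than as verdicts on their own.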

How are we doing this?

Let’s get into the technicalities of what is happening behind the screens.

The main objective is to stream users' behavioural data into the system so that suspicious activity can be analysed and identified. From a technical standpoint, there are some implicit objectives and best practices to implement:

- The data must be displayed in real time.

- The data must be stored in an organised manner for future analysis.

- Data must be transferred securely from the browser and be safe from vulnerabilities and attacks.

- The Synapse system must not affect any of the essential payment activities.

- If any data fails to be sent, it must be backed up in logs.

- Data must be available as a quick view, a detailed view and aggregates.

Now let us go over how we can achieve each of these requirements.

Basic Architecture

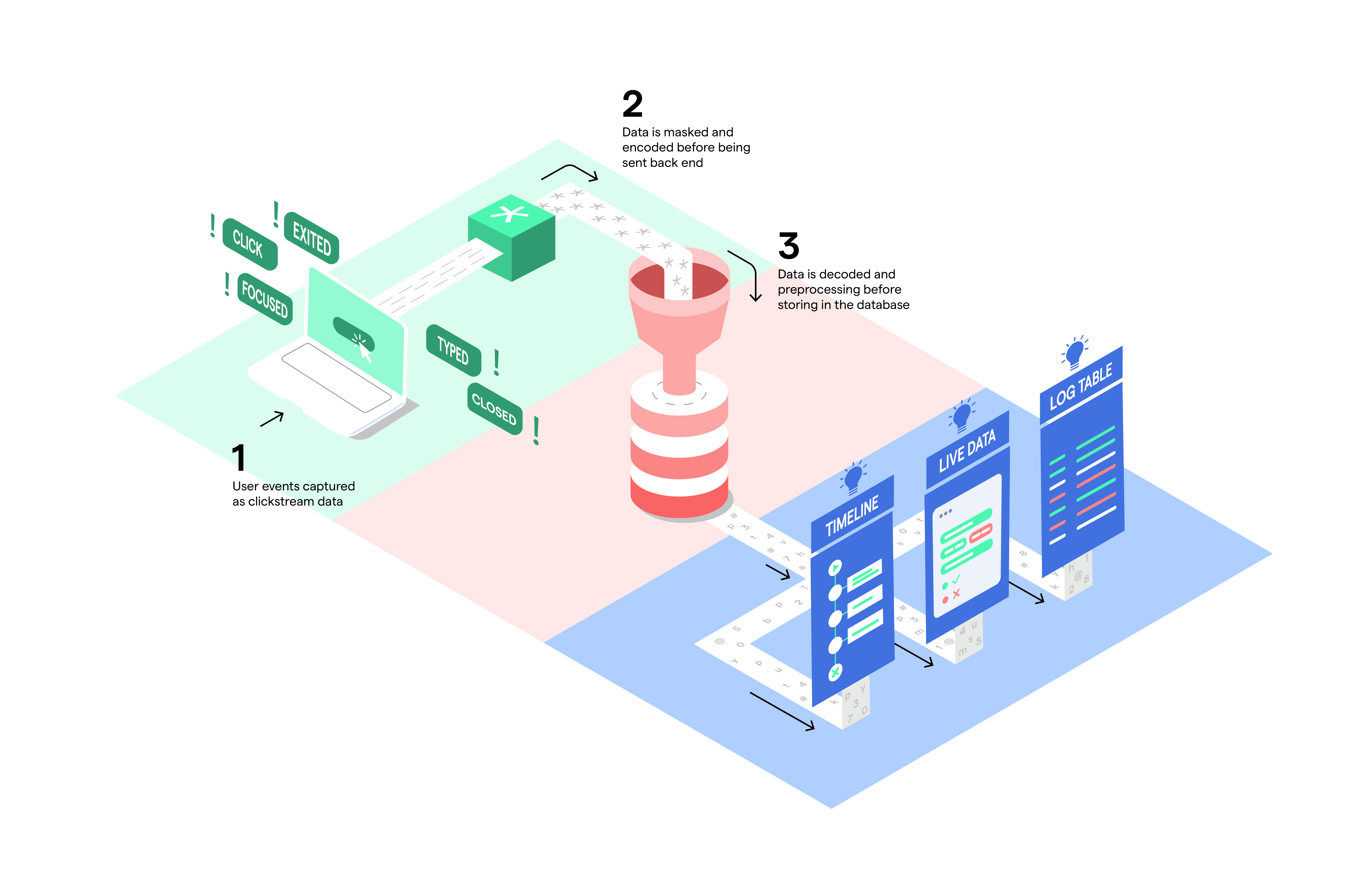

Shown above is a simple depiction of our Synapse system architecture. We begin by streaming user events on the front end with the help of standard browser event handlers. Sensitive data is masked, and the event logs are encoded before being sent to the back end. The data is then queued for pre-processing, during which it is decoded, categorized, aggregated and dynamically partitioned for storage in the database.
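The decode, categorize and aggregate steps of this flow can be sketched in a few lines. This is a minimal sketch, assuming batches arrive as base64-encoded JSON arrays and that Python's in-process `queue.Queue` stands in for whatever message queue is used in production; the `CATEGORIES` table and the field names (`txn_id`, `code`) are hypothetical. Partitioning for storage is left out.

```python
import base64
import json
import queue
from collections import Counter

# Hypothetical: the first digit of a log code identifies its category.
CATEGORIES = {"1": "metadata", "2": "user_input", "3": "navigation", "9": "error"}


def encode_batch(events):
    """Front-end side (sketch): serialise a batch of events and base64-encode it."""
    return base64.b64encode(json.dumps(events).encode("utf-8"))


def preprocess(q):
    """Drain queued batches: decode, categorize and aggregate counts per transaction."""
    events, counts = [], Counter()
    while True:
        try:
            payload = q.get_nowait()
        except queue.Empty:
            break
        for event in json.loads(base64.b64decode(payload)):
            event["category"] = CATEGORIES.get(event["code"][0], "unknown")
            counts[(event["txn_id"], event["category"])] += 1
            events.append(event)
    return events, counts


q = queue.Queue()
q.put(encode_batch([
    {"txn_id": "t1", "code": "101"},
    {"txn_id": "t1", "code": "204"},
    {"txn_id": "t1", "code": "207"},
]))
events, counts = preprocess(q)
print(dict(counts))  # {('t1', 'metadata'): 1, ('t1', 'user_input'): 2}
```

A long-running consumer would block on the queue (or be triggered by it) instead of draining it once, but the per-event work stays the same.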

The data finally makes its way to our analytics platforms where we can make use of it.

Achieving Real-time data

The tricky thing about delivering real-time data is that no single best practice will do the job. The system needs to be optimal at every stage of the architecture.

- Dynamic Partitioning. We continuously segregate our data streams using a partition key provided within the data. The dynamically partitioned data is stored in separate files in the database, making it easier to run high-performance, cost-efficient analytics. Partitioning minimizes the amount of data scanned, optimizes performance and reduces the cost of analytics queries, while also allowing more granular access to the data, which makes it a good fit for real-time data.

- Easy Read and Write Storage. To ensure the system can provide data in real time, it was important to choose a storage service with fast read and write performance.
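Dynamic partitioning can be illustrated by deriving a storage prefix from fields inside each record. The sketch below assumes Hive-style `key=value/` prefixes built from a hypothetical `merchant_id` field and the event date (in UTC); the actual partition key and storage layout used by Synapse are not specified here. A query that filters on one merchant and one day then only needs to read the matching prefix.

```python
from collections import defaultdict
from datetime import datetime, timezone

PARTITION_KEYS = ("merchant_id", "event_date")  # hypothetical partition key


def partition_prefix(record):
    """Hive-style prefix, e.g. 'merchant_id=m1/event_date=1970-01-01/'."""
    return "".join(f"{k}={record[k]}/" for k in PARTITION_KEYS)


def partition(records):
    """Group records by prefix; each group would be written to its own file(s)."""
    groups = defaultdict(list)
    for r in records:
        # Derive the date partition from the epoch-millisecond timestamp, in UTC.
        day = datetime.fromtimestamp(r["ts_ms"] / 1000, tz=timezone.utc).date()
        groups[partition_prefix({**r, "event_date": day.isoformat()})].append(r)
    return dict(groups)


records = [
    {"merchant_id": "m1", "ts_ms": 0},
    {"merchant_id": "m1", "ts_ms": 3_600_000},
    {"merchant_id": "m2", "ts_ms": 0},
    {"merchant_id": "m1", "ts_ms": 86_400_000},
]
for prefix, rows in partition(records).items():
    print(prefix, len(rows))
```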

Batching

During a single transaction, we expect a large number of Synapse logs to be sent from the client's system. We observed a few things:

- Each call goes through a series of steps: a TLS connection is initiated, which requires a handshake to establish a session key. This consumes additional CPU cycles and increases CPU utilisation.

- In addition, since a majority of these transactions take place internationally, the resulting network overhead is substantial.

- A significant number of calls (particularly those carrying metadata and successful setup info) occur almost simultaneously, within milliseconds of one another.

Batching of logs was a solid use case for this problem statement.

A batch can be sent out once it reaches a threshold, either a number of records or a duration of time. Using duration as the threshold was clearly more viable, as the Synapse logs aren't periodic in nature and latency needed to be kept to a minimum. Again, because of the erratic nature of the logs, instead of a static duration threshold, we decided to send batches based on the time since the last push to the batch. For example, with a threshold of 2s, if the batch hasn't received a new log in the past two seconds, the batched logs are sent and the batch is cleared for the next set of logs.
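The "time since last push" rule is essentially a debounce. Below is a minimal sketch of it; `send` is a hypothetical callable that ships one batch, and time is passed in explicitly so the behaviour is deterministic (a browser implementation would more likely reset a `setTimeout` on every push, and a server-side one would read `time.monotonic()`).

```python
class IdleFlushBatcher:
    """Buffer logs and flush once no new log has arrived for `idle_s` seconds."""

    def __init__(self, send, idle_s=2.0):
        self.send = send          # hypothetical callable that ships one batch
        self.idle_s = idle_s
        self.buffer = []
        self.last_push = None

    def push(self, log, now):
        self.buffer.append(log)
        self.last_push = now      # every push restarts the idle timer

    def poll(self, now):
        """Call periodically; flushes if the batch has been idle long enough."""
        if self.buffer and now - self.last_push >= self.idle_s:
            batch, self.buffer = self.buffer, []
            self.send(batch)


sent = []
b = IdleFlushBatcher(sent.append, idle_s=2.0)
b.push("metadata", now=0.0)
b.push("setup_ok", now=0.01)   # near-simultaneous calls land in the same batch
b.poll(now=1.5)                # idle for 1.49 s: nothing sent yet
b.push("input", now=1.6)       # timer restarts
b.poll(now=3.0)                # idle for 1.4 s: still waiting
b.poll(now=3.7)                # idle for 2.1 s: flush
print(sent)  # [['metadata', 'setup_ok', 'input']]
```

One design consideration: under a continuous stream of logs, a pure idle timer never fires, so an upper bound on batch size or age is a common safeguard to add alongside it.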


Security Practices

A system is only as good as its security measures. While building this clickstream system, it was our responsibility to ensure zero vulnerabilities.

Right off the bat, you will notice a few security measures in the architecture diagram. Let us discuss them:

- Masking. Sensitive data is masked at the very beginning of the data’s journey, even before leaving the client’s device, in the front end. This means that the user’s sensitive data will not be viewable at any point, be it over the network, or in the database.

- Encoding. Not only is the data base64 encoded, but we also make use of our own secret codes to transfer the event information. Much like HTTP status codes, we have a set of pre-defined Log Codes that convey all the required information regarding the event and transaction type.

- Auth system. Synapse has an authentication system in place, ensuring that no signal enters unauthenticated. Much like the transaction itself, access to the resources used by the Synapse system on the client's side is restricted.

- Rate Limiting. An age-old practice to prevent DoS attacks. If a malicious user gains access to the Synapse resources through the temporary credentials, this handy technique protects our system from being flooded with data.
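The masking, encoding and rate-limiting measures above can be sketched as follows. This is illustrative only: the card-number regex, the `LOG_CODES` values and the token-bucket parameters are hypothetical, and a token bucket is just one common way to implement rate limiting. Note that base64 is a reversible encoding rather than encryption, so confidentiality on the wire still comes from TLS.

```python
import base64
import json
import re

# Masking: hide all but the last 4 digits of anything shaped like a card
# number (13 to 19 digits). A hypothetical, simplified pattern.
CARD_RE = re.compile(r"\b\d{9,15}(\d{4})\b")


def mask(text):
    return CARD_RE.sub(lambda m: "*" * (len(m.group(0)) - 4) + m.group(1), text)


# Encoding: hypothetical Log Codes, in the spirit of HTTP status codes.
LOG_CODES = {"TAB_HIDDEN": "301", "FIELD_PASTE": "402"}


def encode_event(name, detail):
    """Mask first, then attach the log code and base64-encode the JSON payload."""
    payload = {"c": LOG_CODES[name], "d": mask(detail)}
    return base64.b64encode(json.dumps(payload).encode("utf-8")).decode("ascii")


def decode_event(token):
    return json.loads(base64.b64decode(token))


# Rate limiting: a token bucket (a server would keep one per credential).
class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.updated = 0.0

    def allow(self, now):
        # Refill in proportion to the time elapsed since the last call.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


print(mask("card 4111111111111111"))  # card ************1111
print(decode_event(encode_event("FIELD_PASTE", "pasted 4111111111111111")))
bucket = TokenBucket(rate=5, capacity=10)
print(sum(bucket.allow(now=0.0) for _ in range(15)))  # 10: the excess burst is rejected
```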

System Availability

In the event that Synapse log calls fail, the system ensures two things:

- Payment functionality is not affected by non-essential Synapse activity.

- Logs are backed up to a more reliable service.

In fact, backup logging is in place at every point in the system capable of producing an error. This healthy practice ensures that all the information needed for debugging is readily available, so that root cause analysis can be carried out efficiently.
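The two guarantees above can be sketched as a best-effort send wrapped around the payment step. In this minimal sketch the `transport` callable, the logger name and `submit_payment` are hypothetical, and an in-memory logging handler stands in for the "more reliable service" that receives backups. It runs synchronously for clarity; in the browser the send would typically be fire-and-forget.

```python
import logging


class MemoryBackup(logging.Handler):
    """Stand-in for the more reliable service that receives backup logs."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(record)


backup = MemoryBackup()
backup_log = logging.getLogger("synapse.backup")  # hypothetical logger name
backup_log.addHandler(backup)
backup_log.propagate = False


def send_synapse_log(transport, batch):
    """Best-effort send: a failure is backed up, never raised to the caller."""
    try:
        transport(batch)
        return True
    except Exception:
        # logger.exception also records the traceback, which helps root cause analysis.
        backup_log.exception("synapse send failed; backing up %d logs: %r", len(batch), batch)
        return False


def submit_payment(amount, transport):
    """Hypothetical payment step: the Synapse outcome never changes the result."""
    send_synapse_log(transport, [{"code": "101", "amount": amount}])
    return "payment submitted"


def broken_transport(batch):
    raise ConnectionError("network down")


print(submit_payment(100, broken_transport))  # payment submitted
print(len(backup.records))  # 1
```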

Analytics

With the data safely entering our system with minimal delay, it was time to put it to good use. After undergoing transformation in our intelligent models, the streamed data is presented to our Ops team for further analysis. The team then picks up on alerts and suspicious activity and takes the required actions to secure the system.

With a large amount of data coming in from Synapse, it was important to give the operations team suitable views of it. We introduced three views for Synapse data:

- Transaction Timeline (the quick view). The transaction timeline, or journey, gives a general idea of how the transaction progressed. It contains the more prominent events that took place during the transaction and is colour-coded for quick identification.

- The detailed view. This contains all the Synapse logs, with comprehensive categorization and filtering for more convenient and efficient debugging.

- Aggregates. Last but not least, Synapse aggregates contribute heavily to the analytics dashboard, as they provide many granular details.
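The three views can be thought of as three queries over the same log records. Below is a minimal sketch, assuming each log is a dict with hypothetical `ts_ms`, `type` and `txn_id` fields; the set of "prominent" events and the colour scheme are illustrative assumptions, not the ones the Ops team actually uses.

```python
from collections import Counter

# Hypothetical event types shown on the timeline, and their colour coding.
PROMINENT = {"page_load", "card_entered", "otp_submitted", "payment_result", "error"}
COLOURS = {"payment_result": "green", "error": "red"}


def timeline(logs):
    """Quick view: prominent events only, in time order, each with a colour tag."""
    ordered = sorted(logs, key=lambda e: e["ts_ms"])
    return [(e["ts_ms"], e["type"], COLOURS.get(e["type"], "grey"))
            for e in ordered if e["type"] in PROMINENT]


def detailed(logs, **filters):
    """Detailed view: every log, filtered on field equality."""
    return [e for e in logs if all(e.get(k) == v for k, v in filters.items())]


def aggregates(logs):
    """Aggregates: event counts per type, e.g. to feed a dashboard."""
    return Counter(e["type"] for e in logs)


logs = [
    {"ts_ms": 30, "type": "payment_result", "txn_id": "t1"},
    {"ts_ms": 10, "type": "page_load", "txn_id": "t1"},
    {"ts_ms": 20, "type": "keydown", "txn_id": "t1"},
    {"ts_ms": 25, "type": "keydown", "txn_id": "t1"},
]
print(timeline(logs))  # [(10, 'page_load', 'grey'), (30, 'payment_result', 'green')]
print(len(detailed(logs, type="keydown")))  # 2
print(aggregates(logs)["keydown"])  # 2
```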

All in all, incorporating the concept of Synapse has greatly helped us identify malicious activity, understand our transactions better, capture potential flaws in our application, and kick off our cognitive analytics journey. We are just getting started and have a lot more in mind for future phases of Synapse. We hope to introduce edge computing, WebSockets and persona identification, and to open these features to more businesses.